Commission Investigates AI Risks in Finance as It Finalizes Milestone Law

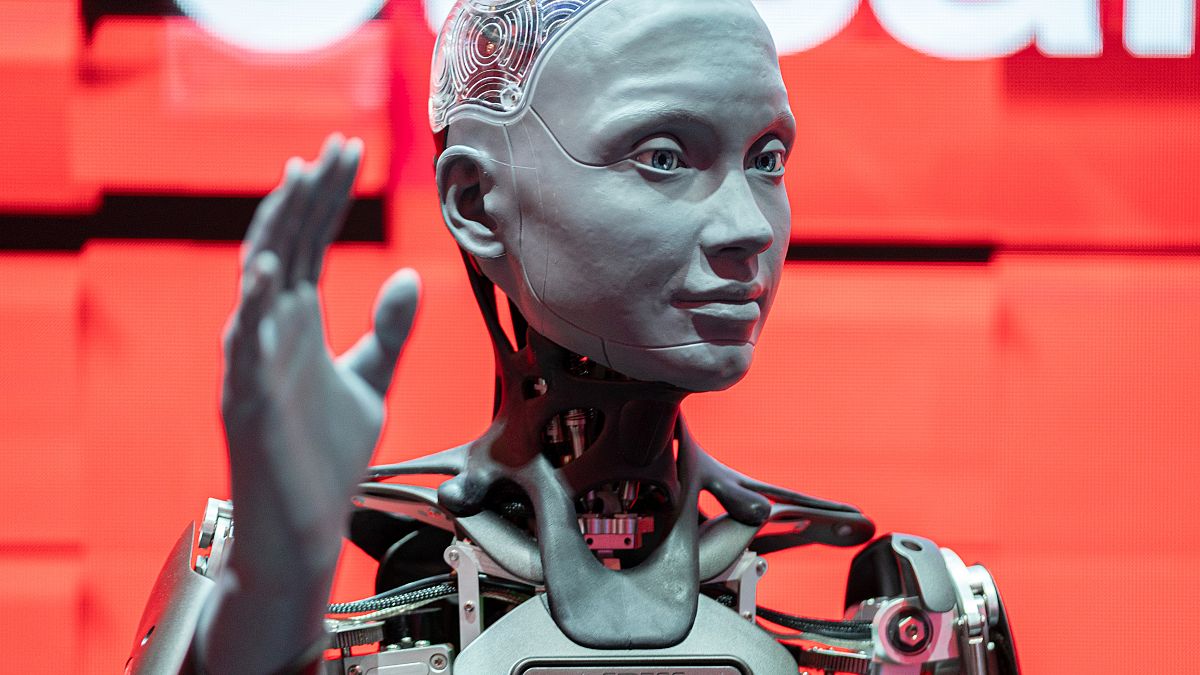

Officials Warn About Consequences of Overreliance on Robots

Key Concerns Highlighted by Experts

- Potential Bias: Robots can inherit or amplify the prejudices present in their training data.

- Panic and Uncertainty: Widespread automation raises fears that people may lose control over crucial decisions.

- Incorrect Guidance: If a robot’s recommendations are flawed, acting on them can lead to harmful outcomes.

Factors Driving the Caution

Governments and technologists point out that several conditions make excessive robot use risky:

- Limited oversight of how algorithms learn.

- Rapid deployment before thorough testing.

- Insufficient transparency into how decisions are made.

Potential Impact on Society

When robotic systems dominate decision‑making without human checks, the following issues may arise:

- Systematic disparities in service delivery.

- Public mistrust in institutions that rely on automation.

- Cascading failures if a robot errs in a critical context.

Recommendations for Policy Makers

To mitigate these risks, experts suggest:

- Rigorous testing and validation before public rollout.

- Clear accountability frameworks assigning responsibility to developers and users.

- Ongoing monitoring and audit of robot performance.

- Inclusion of diverse datasets to reduce bias.

Conclusion

While robots hold promise for efficiency, officials emphasize that unchecked use could lead to bias, panic, and poor advice. A balanced approach—combining human judgment with robotic assistance—remains essential for ensuring safe and fair outcomes.

European Commission Calls for Closer Guidance on AI in Finance

The European Commission has highlighted potential risks and uncertainties surrounding the use of artificial intelligence (AI) within banking, insurance, and securities markets. A consultation released on 18 June underscores the need for additional clarity as the new AI legislation comes into force.

Key Points of the Consultation

- AI Act: The EU’s groundbreaking AI Act, finalized in May 2024, sets safety and non‑discrimination standards for emerging technologies across the economy.

- High‑risk sectors: The regulation designates certain uses of AI as high risk, such as in health, law enforcement and recruitment, and subjects them to extra checks.

- Financial sector focus: Officials emphasize that finance, already heavily regulated, could face substantial penalties if AI applications do not align with these new rules.

- Call for input: EU Commissioner for Financial Services Mairead McGuinness urges stakeholders to contribute feedback on how the AI Act can harmoniously integrate with existing financial regulations.

Statement from Mairead McGuinness

“The AI Act, combined with the current regulatory framework in the financial sector, offers a robust foundation that supports technological innovation,” she said. “We invite the community to share insights in what is a rapidly evolving and critically important arena of technological progress.”

Implications for the Financial Industry

With finance’s reputation for stringent oversight, the Commission stresses that the stakes are high: mistakes could result in severe financial repercussions. Emerging AI tools must therefore be scrutinized to ensure compliance, safety, and fairness across all financial services.

Credit checks

EU Scrutinizes Financial Technology Use

Brussels is once again pressing for clearer regulations on sensitive financial tools—particularly those that assess individual creditworthiness—highlighting concerns that current frameworks may be insufficient.

Context from 2022

- In 2022, the EU adopted the Digital Operational Resilience Act (DORA), a set of rules aimed at preventing data breaches and market instability caused by banks relying on a limited number of unregulated cloud providers.

- During that period, regulators warned that heavy dependence on a handful of data centers could disrupt operations and erode consumer confidence.

Current Fears: Algorithmic Herding

- The Commission now expresses concern over a “herding” effect, in which many financial institutions make the same decisions because they rely on the same IT infrastructure.

- This uniformity could trigger amplified price movements and intensify market concentration, thereby exposing the system to systemic risk.

- Additionally, the consultation notes the danger that automated systems might deliver inaccurate results, further destabilizing markets.

Implications for the European Financial Landscape

These developments underscore the EU’s commitment to safeguarding markets against the unintended consequences of rapid technological adoption. By tightening oversight, the Commission seeks to preserve market integrity while still encouraging innovation in financial services.

Nonsense?

Potential Pitfalls of General‑Purpose AI in Finance

Hallucinations and Misguidance

General‑purpose AI systems can occasionally generate “hallucinations”: responses that are nonsensical or factually wrong. The problem is familiar to anyone who has tried to get a straight answer from ChatGPT, but it is a serious concern for robo‑advisors, which are legally required to give advice that serves their clients’ best interests.

Regulatory Challenges

The European Commission recognizes both the opportunities and the risks posed by AI:

- On the plus side, AI can detect suspicious trading patterns that might hint at market abuse.

- Conversely, poorly constructed training datasets can lead to wide‑scale discrimination, especially when algorithms make critical financial decisions.

Data‑Driven Discrimination

While the EU’s highest court ruled in 201 that charging men higher car‑insurance premiums was unlawful, an automated pricing algorithm might still unwittingly penalize people because of their gender or ethnicity. The catch is that the logic behind such algorithmic choices may be impossible for any human to fully explain, making it difficult to ensure fairness or compliance.